The recent video of British Prime Minister Rishi Sunak and Microsoft’s billionaire co-founder Bill Gates answering questions generated by an AI chatbot added to the noise around ChatGPT.

In the end, it was merely a good PR stunt. Having two leaders in their respective fields asked PR-friendly questions by an artificial intelligence showcased little, other than that this is yet another tool for promoting yourself by talking about safe subjects that put you in a favourable light. It also let Gates give a shout-out to yet another vaccine, this time for controlling malaria.

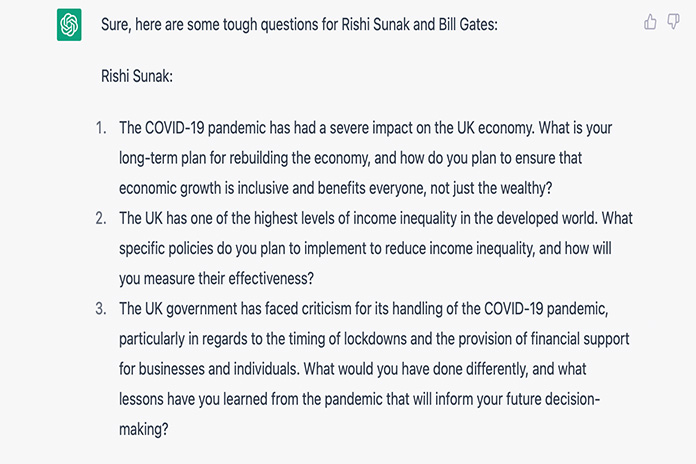

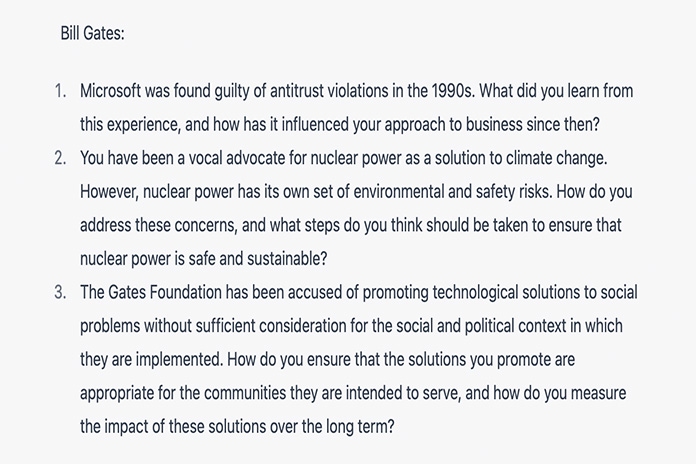

If they really wanted to test the mettle of ChatGPT, the two should have given the app free rein to ask some tough questions.

I asked ChatGPT to pose some tough questions to the gents, and this was what it coughed up.

Money To Be Made

Microsoft has put US$10B into OpenAI, the maker of ChatGPT. The chatbot was released for public testing in November 2022. Over a million people signed up to use it within five days, and examples of what it could do were quick to make the rounds of the Internet.

Eventually two factions formed: those who predict ChatGPT will change their industry and the world for the better, and those who fear it will change their industry and the world for the worse. Either way, the horse has bolted, and it is not necessarily running to plan.

While ChatGPT is being praised for its potential to assist in so many industries, it’s proving to be quite a sneaky operator. You can’t leave it to get on with the task at hand and expect it to follow the plan you have in mind. It does dig up information and comes up with some crackers. But it makes mistakes, too, since it relies on what it learned from text gathered from the Internet, and even then only up to 2021.

But what’s probably more alarming is that AI chatbots make things up.

A friend asked ChatGPT about himself and was fed information that included a made-up website. ChatGPT took it upon itself to come up with a plausible scenario, in this case a website URL that included my friend’s name. Having realised the URL was available, my friend is now considering registering it for himself. Maybe something good inadvertently came out of this. Or was it engineered?

If the track record of Google and Facebook is anything to go by, one of ChatGPT’s aims must be to become your indispensable friend, one that eventually turns into an addiction you wouldn’t mind selling your soul to. ChatGPT could well have known my friend didn’t own that URL and decided to nudge him towards buying it.

ChatGPT is still learning about its own potential, so expect it and other AI chatbots to pull out new tricks that will steal your breakfast, lunch and dinner and sell you back the crumbs.